What is virtualization?

Virtualization is technology that lets you create useful IT services using resources that are traditionally bound to hardware. It allows you to use a physical machine’s full capacity by distributing its capabilities among many users or environments.

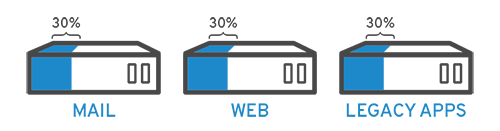

In more practical terms, imagine you have 3 physical servers with individual dedicated purposes. One is a mail server, another is a web server, and the last one runs internal legacy applications. Each server is being used at about 30% capacity, just a fraction of its running potential. But since the legacy apps remain important to your internal operations, you have to keep them and the third server that hosts them, right?

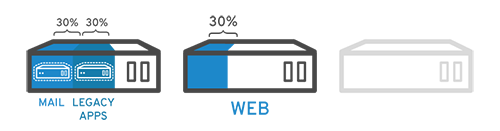

Traditionally, yes. It was often easier and more reliable to run individual tasks on individual servers: 1 server, 1 operating system, 1 task. It wasn’t easy to give 1 server multiple brains. But with virtualization, you can split the mail server into 2 unique virtual servers that handle independent tasks, so the legacy apps can be migrated onto it. It’s the same hardware; you’re just using more of it, more efficiently.
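To make the arithmetic concrete, here is a minimal sketch of that consolidation in Python. The host and workload names, the `migrate` and `utilization` helpers, and the 100% capacity limit are all illustrative assumptions. The 30% figures come from the example above. This models only the bookkeeping, not how a hypervisor actually moves a workload.

```python
# Utilization per physical server, in percent (30% each, as in the example).
# Host and workload names are hypothetical.
before = {
    "mail-server": {"mail": 30},
    "web-server": {"web": 30},
    "legacy-server": {"legacy-apps": 30},
}


def utilization(hosts):
    """Return the total load on each host, in percent."""
    return {name: sum(workloads.values()) for name, workloads in hosts.items()}


def migrate(hosts, workload, source, target, capacity=100):
    """Return a new layout with one workload moved from source to target.

    Raises ValueError if the target host would exceed its capacity.
    """
    load = hosts[source][workload]
    if sum(hosts[target].values()) + load > capacity:
        raise ValueError(f"{target} has no room for {workload}")
    # Copy the layout so the original is left untouched.
    after = {name: dict(workloads) for name, workloads in hosts.items()}
    after[target][workload] = after[source].pop(workload)
    return after


# Move the legacy apps onto the mail server, now split into 2 virtual servers.
after = migrate(before, "legacy-apps", "legacy-server", "mail-server")

print(utilization(before))  # {'mail-server': 30, 'web-server': 30, 'legacy-server': 30}
print(utilization(after))   # {'mail-server': 60, 'web-server': 30, 'legacy-server': 0}
```

In this sketch the mail server ends up at about 60% utilization, and the third server carries no load, so it could be repurposed or retired.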

A brief history of virtualization

While virtualization technology can be traced back to the 1960s, it wasn’t widely adopted until the early 2000s. The technologies that enabled virtualization, like hypervisors, were developed decades ago to give multiple users simultaneous access to computers that performed batch processing. Batch processing was a popular computing style in the business sector that ran routine tasks thousands of times very quickly (like payroll).

But, over the next few decades, other solutions to the many users/single machine problem grew in popularity while virtualization didn’t. One of those other solutions was time-sharing, which isolated users within operating systems—inadvertently leading to other operating systems like UNIX, which eventually gave way to Linux®. All the while, virtualization remained a largely unadopted, niche technology.

Fast forward to the 1990s. Most enterprises had physical servers and single-vendor IT stacks, which didn’t allow legacy apps to run on a different vendor’s hardware. As companies updated their IT environments with less-expensive commodity servers, operating systems, and applications from a variety of vendors, they were bound to underused physical hardware: each server could only run 1 vendor-specific task.

This is where virtualization really took off. It was the natural solution to 2 problems: companies could partition their servers and run legacy apps on multiple operating system types and versions. Servers started being used more efficiently (or not at all), thereby reducing the costs associated with purchase, setup, cooling, and maintenance.
